Summary

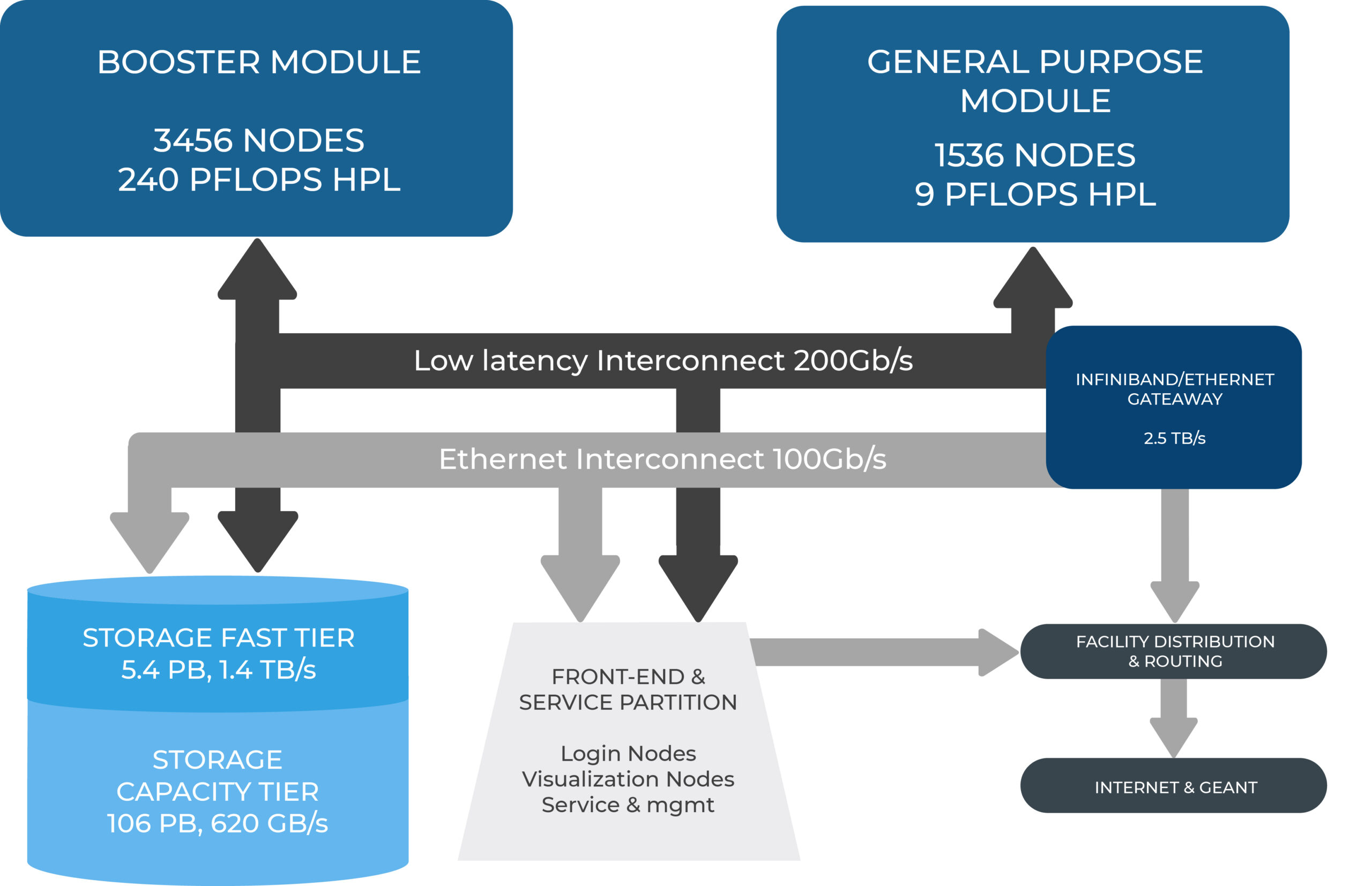

Leonardo is a step toward providing pre-exascale computing capabilities to researchers across Italy and Europe. It aims at maximum performance and can be classified as a top-tier supercomputing system in Europe.

The system combines the most advanced computing components to address even the most complex computational workflows, involving both HPC and AI.

The Leonardo system is capable of 240 petaFLOPS and is equipped with over 100 PB of storage capacity.

Hardware Overview

Computing Partitions

Leonardo provides users with two compute modules:

- A Booster module, whose purpose is to maximize computational performance. It was designed to meet the most computationally demanding requirements in terms of time-to-solution while optimizing energy-to-solution. This is achieved with 3456 computing nodes, each equipped with four NVIDIA A100 SXM4 64 GB GPUs driven by a single 32-core Intel Ice Lake CPU.

- A Data Centric General Purpose (DCGP) module designed to serve a broad range of applications. Its 1536 nodes are each equipped with two 56-core Intel Sapphire Rapids CPUs, and together they reach over 9 petaFLOPS of sustained performance.

All the nodes are interconnected through an Infiniband HDR interconnect network organized in a Dragonfly+ topology.

Booster module: 3456 nodes (13824 GPUs)

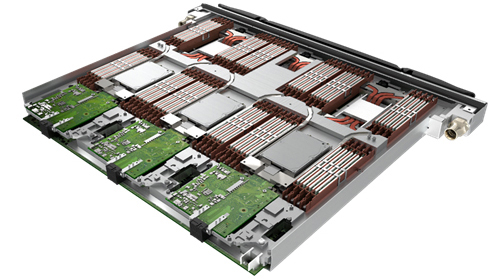

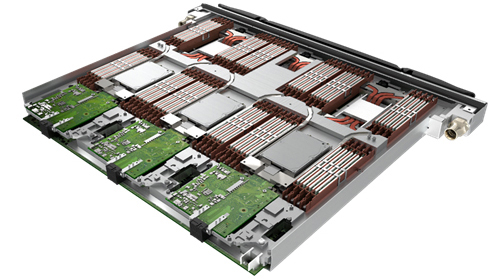

Single-node Da Vinci blade, based on the BullSequana X2135, composed of:

- 1x 32-core Intel Xeon Platinum 8358 CPU, 2.6 GHz (Ice Lake)

- 8x 64 GB DDR4-3200 (512 GB)

- 4x NVIDIA custom Ampere A100 GPU 64 GB HBM2

- 2x dual-port HDR network interface (400 Gbps aggregated)

DCGP module: 1536 nodes (172032 CPU cores)

Three-node BullSequana X2140 blade, each node with:

- 2x 56-core Intel Xeon Platinum 8480+ CPU, 2.0 GHz (Sapphire Rapids)

- 16x 32 GB DDR5-4800 (512 GB)

- 1x SSD 3.84 TB M.2 NVMe

- 1x single-port HDR100 network interface (100 Gbps)
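The module totals quoted in the headings above follow directly from the per-node specifications. The short Python sketch below multiplies them out as a cross-check; the `NodeType` class and its field names are hypothetical, introduced only for illustration, and memory totals are computed in decimal units (1 TB = 1000 GB).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    """Per-node resources taken from the hardware overview (illustrative model)."""
    name: str
    nodes: int
    sockets: int
    cores_per_socket: int
    dimms: int
    dimm_gb: int
    gpus_per_node: int = 0
    gpu_mem_gb: int = 0  # HBM2 per GPU

    @property
    def host_mem_gb(self) -> int:
        # DDR capacity per node = number of DIMMs x DIMM size
        return self.dimms * self.dimm_gb

    def total_cores(self) -> int:
        return self.nodes * self.sockets * self.cores_per_socket

    def total_gpus(self) -> int:
        return self.nodes * self.gpus_per_node

    def total_host_mem_tb(self) -> float:
        # Decimal units: 1 TB = 1000 GB
        return self.nodes * self.host_mem_gb / 1000

    def total_gpu_mem_tb(self) -> float:
        return self.total_gpus() * self.gpu_mem_gb / 1000


# Booster: 1x 32-core Ice Lake, 8x 64 GB DDR4, 4x A100 64 GB
BOOSTER = NodeType("Booster", nodes=3456, sockets=1, cores_per_socket=32,
                   dimms=8, dimm_gb=64, gpus_per_node=4, gpu_mem_gb=64)
# DCGP: 2x 56-core Sapphire Rapids, 16x 32 GB DDR5, no GPUs
DCGP = NodeType("DCGP", nodes=1536, sockets=2, cores_per_socket=56,
                dimms=16, dimm_gb=32)

if __name__ == "__main__":
    for module in (BOOSTER, DCGP):
        print(f"{module.name}: {module.total_cores()} CPU cores, "
              f"{module.total_gpus()} GPUs, "
              f"{module.total_host_mem_tb():.1f} TB DDR, "
              f"{module.total_gpu_mem_tb():.1f} TB HBM2")
```

The products confirm the 13824 GPUs of the Booster module and the 172032 CPU cores of the DCGP module, and show that both node types carry 512 GB of host memory per node.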

Storage

The storage system features a capacity tier and a fast tier. This architecture offers great flexibility and can address even the most demanding I/O use cases in terms of bandwidth and IOPS. Combined with the Booster compute node design and its GPUDirect capability, the storage architecture increases I/O bandwidth and reduces I/O latency towards the GPUs, improving performance for a significant number of use cases.

The fast tier is based on DDN EXAScaler and acts as a high-performance tier specifically designed to support high-IOPS workloads. This tier is entirely flash-based, built on NVMe SSDs, and therefore provides high metadata performance, which is especially critical for AI workloads and, more generally, whenever many files must be created. A wide set of options is available to integrate the fast and capacity tiers and make them available to end users.

Fast tier: 5.7 PB full flash

31x DDN ES400NVX2 appliances, each configured with:

- 24x SSD 7.68 TB NVMe with encryption support (184.3 TB)

- 4x InfiniBand HDR ports (800 Gbps aggregated)

- metadata resource included

Capacity tier: 137.6 PB

31x DDN ES7990X appliances, each configured with:

- 1 Controller head (82 disks) + 2 expansion enclosures (SS9012, 164 disks)

- 246x HDD 18 TB SAS 7200 rpm (4.4 PB)

- 4x InfiniBand HDR100 ports (400 Gbps aggregated)

Metadata: 4x DDN SFA400NVX appliances (322 TB total), each configured with:

- 21x SSD 3.84 TB NVMe with encryption support (80.6 TB)

- 8x InfiniBand HDR100 ports (800 Gbps aggregated)
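The per-appliance figures above can be cross-checked with simple arithmetic. The sketch below is a hypothetical model (the class and field names do not come from any DDN tool); it assumes raw capacity in decimal units before any RAID or file-system overhead, and assumes 200 Gbps per HDR port and 100 Gbps per HDR100 port.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApplianceGroup:
    """A set of identical storage appliances (illustrative model)."""
    name: str
    count: int        # number of appliances
    drives: int       # drives per appliance
    drive_tb: float   # capacity per drive, decimal TB
    ports: int        # InfiniBand ports per appliance
    port_gbps: int    # assumed line rate per port: HDR = 200, HDR100 = 100

    def raw_tb_per_appliance(self) -> float:
        return self.drives * self.drive_tb

    def raw_tb_total(self) -> float:
        return self.count * self.raw_tb_per_appliance()

    def gbps_per_appliance(self) -> int:
        return self.ports * self.port_gbps


TIERS = [
    ApplianceGroup("Fast tier (ES400NVX2)", 31, 24, 7.68, 4, 200),
    ApplianceGroup("Capacity tier (ES7990X)", 31, 246, 18.0, 4, 100),
    ApplianceGroup("Metadata (SFA400NVX)", 4, 21, 3.84, 8, 100),
]

if __name__ == "__main__":
    for tier in TIERS:
        print(f"{tier.name}: {tier.raw_tb_per_appliance():.2f} TB per appliance, "
              f"{tier.raw_tb_total() / 1000:.2f} PB raw in total, "
              f"{tier.gbps_per_appliance()} Gbps per appliance")
```

Under these assumptions the products reproduce the quoted 184.3 TB per fast-tier appliance, about 5.7 PB for the fast tier, 4.4 PB per capacity appliance and about 322 TB of metadata storage; the capacity-tier product works out to roughly 137.3 PB raw.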

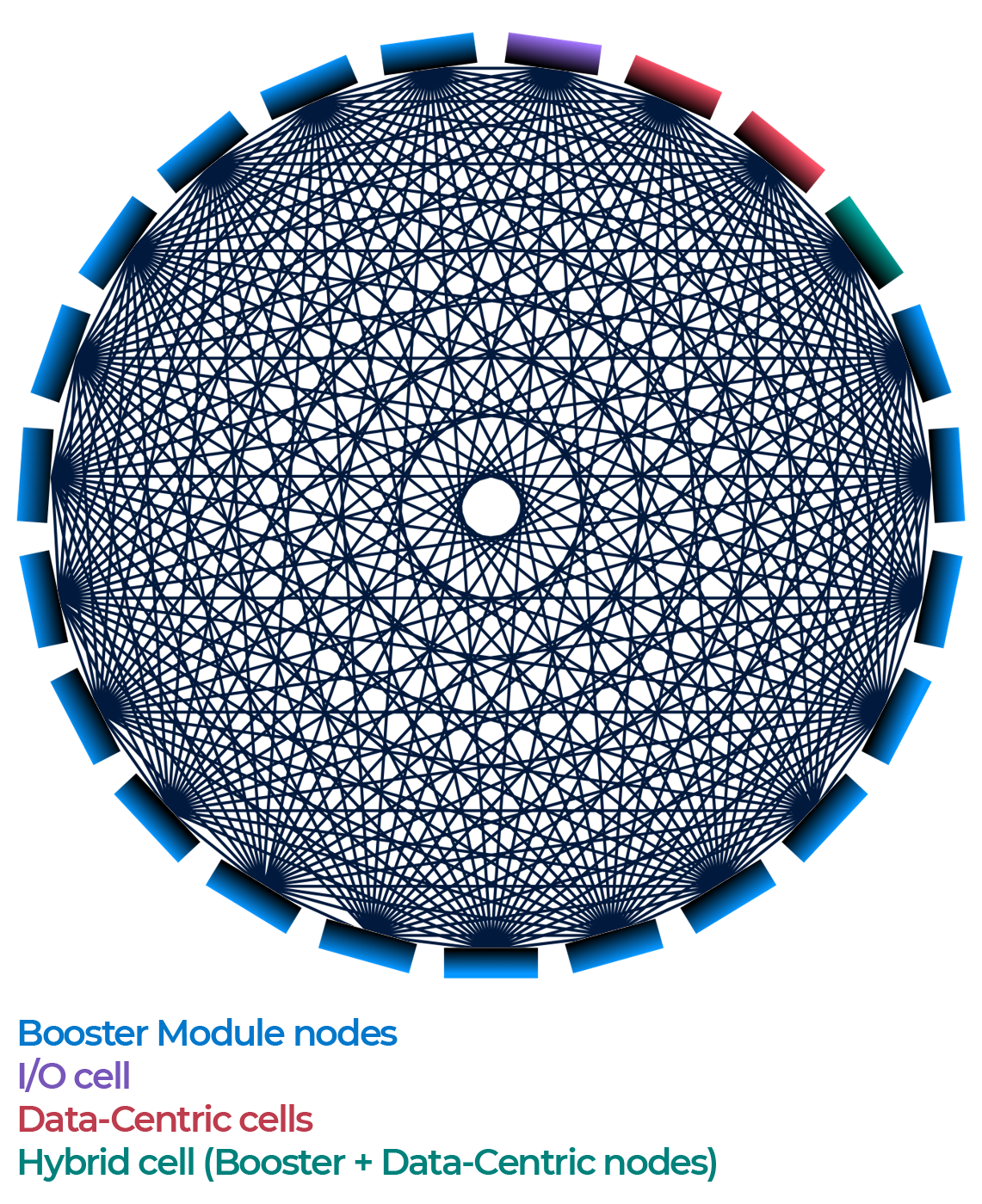

Data Network

The low-latency, high-bandwidth interconnect is based on an NVIDIA InfiniBand HDR solution and uses a Dragonfly+ topology. This relatively new topology for InfiniBand networks makes it possible to interconnect a very large number of nodes while limiting the number of switches and cables and keeping the network diameter very small.

Compared with non-blocking fat-tree topologies, Dragonfly+ reduces cost and makes scaling out to a larger number of nodes feasible. Compared with a 2:1 blocking fat tree, it can achieve close to 100% network throughput for arbitrary traffic. Leonardo's Dragonfly+ topology features a fat-tree intra-group interconnection, with two layers of switches, and an all-to-all inter-group interconnection.
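To make the small-diameter property concrete, the sketch below builds a toy Dragonfly+ switch graph, with a two-level fat tree inside each group and one global link between every pair of groups, then measures the worst-case number of switch-to-switch hops between leaf switches using breadth-first search. The group sizes and the spine-to-group wiring rule are hypothetical and much smaller than Leonardo's real configuration.

```python
from collections import deque
from itertools import combinations


def build_dragonfly_plus(groups: int, leaves: int, spines: int) -> dict:
    """Adjacency map of switches for a toy Dragonfly+ network.

    Switch ids are tuples (group, kind, index) with kind "leaf" or "spine".
    """
    adj: dict = {}

    def link(a, b):
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)

    # Intra-group: two-level fat tree, every leaf connects to every spine.
    for g in range(groups):
        for l in range(leaves):
            for s in range(spines):
                link((g, "leaf", l), (g, "spine", s))

    # Inter-group: all-to-all, one global link per pair of groups.
    # Hypothetical wiring rule: group a reaches group b via spine (b % spines).
    for a, b in combinations(range(groups), 2):
        link((a, "spine", b % spines), (b, "spine", a % spines))
    return adj


def hops_from(adj: dict, src) -> dict:
    """Breadth-first search: hop count from src to every reachable switch."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        for nxt in adj[node]:
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


def leaf_diameter(adj: dict) -> int:
    """Largest shortest-path hop count between any two leaf switches."""
    leaves = [n for n in adj if n[1] == "leaf"]
    worst = 0
    for src in leaves:
        dist = hops_from(adj, src)
        worst = max(worst, max(dist[dst] for dst in leaves))
    return worst


if __name__ == "__main__":
    net = build_dragonfly_plus(groups=5, leaves=4, spines=4)
    print("switches:", len(net))
    print("max switch-to-switch hops between leaves:", leaf_diameter(net))
```

As long as there are at least two groups, the result stays at 3 hops (local leaf, local spine, remote spine, remote leaf), which is why Dragonfly+ can add groups without lengthening minimal paths.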

Leonardo's data network comes with improved adaptive routing support, which is crucial for achieving high bisection bandwidth through non-minimal routing. Intra-group and inter-group routing must be balanced to provide a low hop count and high network throughput. This is achieved by evaluating routing decisions in every router along the packet's path, which ensures a minimum network throughput of about 50%.
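The exact adaptive routing algorithm is not described here. As a hedged illustration of the general idea, the sketch below implements a UGAL-style (Universal Globally-Adaptive Load-balanced) decision, a well-known textbook heuristic that is not necessarily what NVIDIA's switches do: at each router, minimal and non-minimal candidate ports are compared by queue depth multiplied by remaining hop count. The `Candidate` class and the queue and hop values in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """An output port a router could forward a packet to (hypothetical)."""
    minimal: bool     # True if this port lies on a minimal path
    queue_depth: int  # packets currently queued on the port
    hops: int         # remaining switch hops to the destination via this port


def choose_port(candidates: list[Candidate]) -> Candidate:
    """UGAL-style choice: minimise estimated delay = queue_depth * hops.

    On a tie, prefer the minimal candidate, since it consumes fewer links.
    """
    return min(candidates, key=lambda c: (c.queue_depth * c.hops, not c.minimal))


if __name__ == "__main__":
    quiet = [Candidate(True, 2, 3), Candidate(False, 1, 6)]
    congested = [Candidate(True, 10, 3), Candidate(False, 2, 6)]
    print("quiet network ->",
          "minimal" if choose_port(quiet).minimal else "non-minimal")
    print("congested minimal port ->",
          "minimal" if choose_port(congested).minimal else "non-minimal")
```

Under light load the minimal path wins; when its queue builds up, traffic spills onto a longer non-minimal route through another group. Because such a route uses roughly twice as many global links, worst-case throughput is commonly bounded at around half of the peak, which is in line with the minimum figure of about 50% quoted above.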

The system also provides the computational capacity for bandwidth-demanding visualizations, such as 3D applications, combined with fast access to data. 16 additional nodes, each equipped with 6.4 TB NVMe disks and 2 NVIDIA Quadro RTX8000 48 GB GPUs, are available as visualization nodes.

Energy Efficiency

Leonardo is equipped with two software tools that enable dynamic adjustment of power consumption. The first, Bull Energy Optimiser, tracks energy and temperature profiles via the IPMI and SNMP protocols. These tools can interact with the Slurm scheduler to tune some of its specific features, such as selecting jobs based (in part) on their expected power consumption or dynamically capping CPU frequencies based on overall consumption.
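Power-aware job selection of this kind can be sketched as a greedy policy: jobs are considered in priority order and started only if their expected power fits within the remaining budget. This is a hypothetical illustration; the `Job` fields, the budget values and the policy itself are assumptions, not the actual interface or algorithm of Bull Energy Optimiser or Slurm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    job_id: str
    priority: int
    expected_kw: float  # hypothetical per-job power estimate


def select_jobs(queue: list[Job], running_kw: float, budget_kw: float) -> list[str]:
    """Start jobs by descending priority, skipping any job whose expected
    power would push total consumption above the budget."""
    started = []
    load = running_kw
    for job in sorted(queue, key=lambda j: j.priority, reverse=True):
        if load + job.expected_kw <= budget_kw:
            started.append(job.job_id)
            load += job.expected_kw
    return started


if __name__ == "__main__":
    queue = [Job("a", 10, 40.0), Job("b", 8, 30.0), Job("c", 5, 15.0)]
    # 50 kW already in use, 110 kW budget: "b" would exceed it, "c" still fits.
    print(select_jobs(queue, running_kw=50.0, budget_kw=110.0))
```

Skipping a job that does not fit, rather than stopping at it, lets smaller jobs further down the queue use the remaining power headroom, similar in spirit to backfilling.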

This dynamic tuning is enhanced by the second tool, Bull Dynamic Power Optimiser, which monitors power consumption core by core and caps frequencies at the value that gives the best balance between energy savings and performance degradation for the running applications.

For GPU power consumption, NVIDIA Data Center GPU Manager (DCGM) is provided, which allows GPU clocks to be scaled down when consumption exceeds a custom threshold.
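Lowering GPU clocks when power exceeds a threshold can be illustrated with a simple hysteresis controller. The sketch below is hypothetical: the linear power model, the clock limits, the 15 MHz step and the 5% hysteresis band are illustrative assumptions, and it neither calls DCGM nor reproduces its internals.

```python
def next_clock_mhz(current_mhz: int, power_w: float, threshold_w: float,
                   min_mhz: int, max_mhz: int, step_mhz: int = 15) -> int:
    """One step of a threshold controller with hysteresis (hypothetical).

    Step the clock down while power exceeds the threshold; step it back up
    only once power falls below 95% of the threshold.
    """
    if power_w > threshold_w:
        return max(min_mhz, current_mhz - step_mhz)
    if power_w < 0.95 * threshold_w:
        return min(max_mhz, current_mhz + step_mhz)
    return current_mhz


def simulate(steps: int, start_mhz: int, threshold_w: float,
             min_mhz: int, max_mhz: int) -> int:
    """Run the controller against a toy power model and return the final clock."""
    clock = start_mhz
    for _ in range(steps):
        power = 100 + 0.25 * clock  # toy model: power grows linearly with clock
        clock = next_clock_mhz(clock, power, threshold_w, min_mhz, max_mhz)
    return clock


if __name__ == "__main__":
    print(simulate(steps=50, start_mhz=1410, threshold_w=400.0,
                   min_mhz=210, max_mhz=1410))
```

With these toy numbers, starting at 1410 MHz the clock steps down until the modelled power no longer exceeds 400 W and settles at 1200 MHz; the hysteresis band keeps it from immediately stepping back up.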

Performance Overview

Leonardo is designed as a general-purpose system architecture able to serve all scientific communities and meet the needs of industrial R&D customers.

Scalable and high-throughput computing typically refer to scientific use cases that require large amounts of computational resources, either through highly parallel simulation runs on large-scale HPC architectures or by launching a large number of smaller runs to evaluate the impact of different parameters. The Leonardo system is expected to support both models, providing a significant speed-up for workloads that can exploit accelerators.

Leveraging the Booster architecture, early benchmark figures report a 15-30x time-to-science improvement over Cineca's Tier-0 system Marconi-100 for applications already ported to NVIDIA GPUs (QuantumEspresso, Specfem3D_Globe, Milc QCD).

Examples of applications used in production on Marconi-100 (NVIDIA V100 based) can be found here.

The number of applications able to run on GPUs is growing steadily, thanks to the spread of dedicated programming paradigms and supported by a growing ecosystem (EU Centers of Excellence, hackathons, local support from computing centers).

AI-based applications can leverage state-of-the-art GPUs that provide high low-precision peak performance and dedicated Tensor Cores, together with a system architecture designed to support I/O-bound workloads, thanks in part to the NVIDIA GPUDirect RDMA feature and the fast storage tier.

For more details, please refer to the article at the following link.